Ankur Saxena (Investment Director) | TDK Ventures

Introduction

Beyond a doubt, we are living in the age of digital transformation. Cars are beginning to drive themselves, drones chart their own paths through winding landscapes and gusting wind, factories monitor themselves, and robots are advancing to work alongside human beings. While the software, computers, algorithms, and, of course, AI behind these innovations are astounding, a key differentiator remains the ability to operate at the edge: delivering compute performance locally, quickly, and within tight power budgets. TDK Ventures has long prioritized edge intelligence, both for its resonance with TDK’s sensor, power, and connectivity businesses and for its ability to democratize AI beyond the hyperscale cloud.

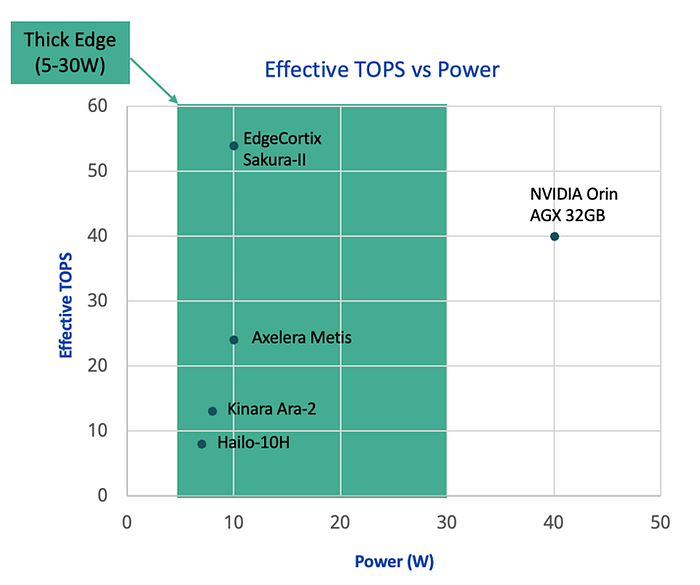

While edge compute has been an active area of development for decades, our recent deep explorations revealed a gap in the 5–30 W segment: too heavy for “tiny ML,” too latency-critical for the cloud, and poorly served by repurposed mobile SoCs or datacenter-first GPUs. We see an opportunity in this gap, particularly now that advances in hardware, models, software, and demand have converged. With near-perfect timing, EdgeCortix has emerged with the right architecture and, crucially, the right software to lead. This is why we invested: to help scale a new class of Thick Edge AI compute.

Figure 1. The category of Thick Edge compute in the landscape of power and TOPS requirements.

Now is the time to invest in Thick Edge AI

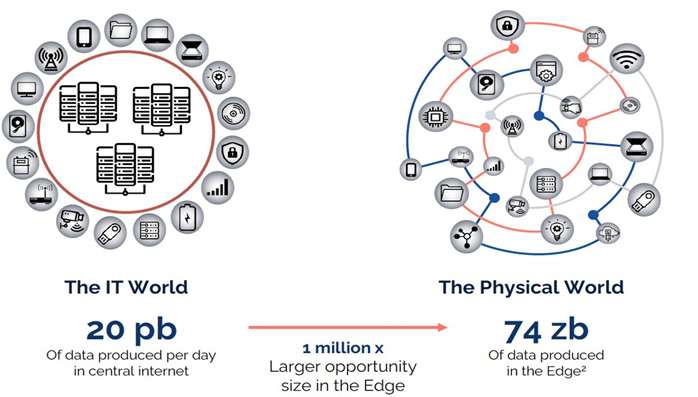

At its core, the shift toward AI at the edge is a shift from the IT world to the physical world, and with it comes an opportunity to democratize AI and to support the more than 74 ZB of data expected to accompany it. Thick Edge AI, roughly the 5–30 W envelope, is tracking toward a ~$20B opportunity by 2029 at a ~35% CAGR, pulled by funded, near-term roadmaps in mobility, industrial automation, robotics, and space [1–3].

Figure 2. The shift to data at the edge represents a six-order-of-magnitude increase in data opportunities.
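The growth figures above can be sanity-checked with compound-growth arithmetic. The minimal sketch below inverts V_end = V_start × (1 + r)^n to recover the market size implied at an earlier base year, then rebuilds the year-by-year path. The 2024 base year (five compounding years to 2029) is our illustrative assumption, not a figure taken from the cited reports.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Invert compound growth, end = start * (1 + cagr) ** years, to solve for start."""
    if years < 0 or cagr <= -1.0:
        raise ValueError("need years >= 0 and cagr > -100%")
    return end_value / (1.0 + cagr) ** years


def trajectory(start_value: float, cagr: float, years: int) -> list[float]:
    """Value in each year from the base year through the end year at a constant CAGR."""
    return [start_value * (1.0 + cagr) ** t for t in range(years + 1)]


if __name__ == "__main__":
    # From the text: ~$20B by 2029 at ~35% CAGR.
    # ASSUMPTION (illustrative only): a 2024 base year, i.e. 5 compounding years.
    end_2029_busd = 20.0
    cagr = 0.35
    base_year, end_year = 2024, 2029
    years = end_year - base_year

    start = implied_start_value(end_2029_busd, cagr, years)
    path = trajectory(start, cagr, years)
    for year, value in zip(range(base_year, end_year + 1), path):
        print(f"{year}: ~${value:.1f}B")
```

Under that assumption the implied 2024 base is roughly $4.5B. A different base year changes the result substantially, so treat this as a consistency check on the stated growth rate rather than an independent market estimate.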

Beyond market signals, what makes now the ideal time to invest is the convergence of hardware and software innovations that can finally deliver at the edge. For years, the cloud imposed a compute bottleneck: inference required a round trip to a distant data center, costing hundreds of milliseconds. New hardware has moved inference onto the device, collapsing response times to single-digit milliseconds or less, while also improving the security of sensitive in-app data (location, biometrics, etc.) by keeping it on the device rather than sending it elsewhere [4, 5].

Meanwhile, the supply side is also ready. Low-power NPUs, edge accelerators, and chiplet-based designs are shipping in developer and production form, and sub-10 nm nodes and advanced packaging are now economically viable for edge use cases. On the model side, post-training quantization, pruning, distillation, sparsity, and compact architectures (MobileNet, EfficientNet-Lite, and small transformer variants) deliver near state-of-the-art accuracy at a fraction of the footprint.

Supportive ecosystems add further momentum. National programs such as Japan’s NEDO (New Energy and Industrial Technology Development Organization), the U.S. CHIPS Act, and Horizon Europe provide non-dilutive capital, while TensorFlow Lite (now LiteRT), PyTorch Mobile/ExecuTorch, and ONNX Runtime offer mature deployment paths [6–8]. Together, these open a competitive window: incumbents dominate datacenter and mobile SoCs, but the fragmented Thick Edge landscape leaves room for focused startups to win early design-ins and compound from there. The PC ecosystem’s Copilot+ class of NPUs, delivering 40+ TOPS within laptop power budgets, underscores how quickly on-device AI is becoming the default execution target rather than the exception [9].

Under the hood, new packaging and chiplets are also changing the game. Standards like UCIe make it practical to mix and match chiplets across process nodes and vendors, trimming cost and time-to-market, while advanced packaging capacity ramps up to support bandwidth-hungry AI parts [10], [11].
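Post-training quantization is the simplest of the model-side techniques listed above to illustrate. A minimal sketch, assuming symmetric, per-tensor int8 quantization with round-to-nearest; production toolchains typically add per-channel scales, activation calibration, and asymmetric zero points, and this is not the specific scheme used by any framework named here or by EdgeCortix’s MERA. The weights are hypothetical.

```python
def quantize_int8_symmetric(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric, per-tensor int8 post-training quantization.

    The scale maps the largest-magnitude weight to +/-127, so 0.0 stays exactly 0.
    Python's round() breaks .5 ties to the nearest even integer.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0.0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    # Hypothetical weights, purely for illustration.
    weights = [0.5, -1.27, 0.0, 0.3, 0.004]
    codes, scale = quantize_int8_symmetric(weights)
    restored = dequantize(codes, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print("int8 codes:", codes)
    print(f"scale={scale:.5f}, max abs error={max_err:.5f} (bound: scale / 2)")
    # Storage drops from 4 bytes per float32 weight to 1 byte per int8 code.
    print(f"bytes: float32={4 * len(weights)}, int8={len(codes)}")
```

The design choice worth noting is the trade-off in the single scale: one outlier weight stretches the scale for the whole tensor, which is why per-channel scales and calibration matter in practice.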

The real moat in edge AI is software

Hardware advantages erode quickly. A manufacturing lead, a process-node edge, and even microarchitectural tricks are hard to defend for long. Software, by contrast, compounds. A strong compiler and runtime can deliver a 2–5× uplift on the same silicon through better mapping and scheduling, and those gains carry across SKUs and generations. The sticky parts of the stack (model optimization flows, quantization/distillation pipelines, pre-optimized model libraries, SDKs and APIs, and orchestration layers) create developer lock-in and network effects. The platforms that win let developers bring standard ONNX, TensorFlow, or PyTorch models, hit “build,” and get high utilization on constrained hardware without hand-tuning every kernel. This is why developer ecosystems like CUDA became strategic moats for GPUs, and why Microsoft’s Copilot+ PCs hinge as much on the software stack and tooling as on the NPU blocks themselves [9], [12], [13].

Analysts expect edge AI software to grow faster than many hardware segments this decade, reinforcing our thesis that the durable value in Thick Edge will accrue to those who solve the developer experience end-to-end and make heterogeneity an asset, not a tax [1].
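One way to see how a compiler can lift performance on unchanged silicon is the roofline model: attainable throughput is capped either by the compute peak or by memory bandwidth multiplied by arithmetic intensity (operations per byte moved off-chip). Operator fusion and tiling raise arithmetic intensity by keeping intermediates on-chip. The sketch below uses hypothetical numbers (a 60 TOPS peak, 68 GB/s of DRAM bandwidth, and intensities of 100 vs. 400 ops/byte) chosen only for illustration; they are not SAKURA-II specifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    peak_tops: float       # theoretical compute ceiling, tera-ops/s
    dram_gb_per_s: float   # off-chip memory bandwidth, GB/s


def attainable_tops(acc: Accelerator, ops_per_byte: float) -> float:
    """Roofline bound: min(compute ceiling, bandwidth x arithmetic intensity).

    GB/s * ops/byte = giga-ops/s; divide by 1,000 to get tera-ops/s.
    """
    memory_bound_tops = acc.dram_gb_per_s * ops_per_byte / 1_000.0
    return min(acc.peak_tops, memory_bound_tops)


if __name__ == "__main__":
    # HYPOTHETICAL device and workload numbers, not real product specifications.
    acc = Accelerator(peak_tops=60.0, dram_gb_per_s=68.0)
    naive = attainable_tops(acc, ops_per_byte=100.0)  # intermediates spill to DRAM
    tuned = attainable_tops(acc, ops_per_byte=400.0)  # fused + tiled, data reused on-chip
    print(f"naive mapping: {naive:.1f} TOPS ({naive / acc.peak_tops:.0%} of peak)")
    print(f"fused/tiled:   {tuned:.1f} TOPS ({tuned / acc.peak_tops:.0%} of peak)")
    print(f"uplift on the same silicon: {tuned / naive:.1f}x")
```

In this toy setup the 4× gain comes entirely from raising arithmetic intensity; the compute peak never changes. Real compilers also win through operator placement and scheduling, which a two-parameter roofline does not capture.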

Our “Hill”: Thick Edge (5–30 W)

We frame our investment theses in terms of “Hills,” which sets up a “King of the Hill” search for the company best positioned to lead each one. In this case, our hill is Thick Edge compute.

We start with what we know. The 5–30 W segment is a large, fast-growing market with clear ROI in industrial vision, autonomy, robotics, and space. It is fragmented in both hardware and software, leaving room for focused players to establish category leadership. Policy tailwinds, from the U.S. CHIPS Act to Japan’s NEDO programs to EU digital and Horizon initiatives, are injecting non-dilutive capital into edge silicon R&D and commercialization [10], [11]. What we don’t know is how long a given hardware advantage will shield a startup, and how to navigate the nuanced, highly variable mix of hybrid skills that edge AI requires.

Weighing what we know against what we don’t, we believe Thick Edge is more attractive than the sub-1 W “Tiny Edge” markets (which suffer from low average selling prices and long sales cycles) or the 1–3 W mobile market (where incumbents have deeply entrenched SoCs). Fragmentation creates whitespace for integrated solutions that pair reconfigurable silicon with first-class compilers and runtimes. Teams that lean into policy leverage (CHIPS/NEDO/EU) will do more with less equity. Go-to-market will favor vertical beachheads with immediate ROI (industrial, robotics, telecom/IT at the edge), followed by broader horizontal expansion. M&A appetite from industrial OEMs, hyperscalers, and semiconductor majors is likely to remain robust as edge inference becomes “table stakes” in product lines.

From all this, we believe a King of the Hill will exhibit the following KPIs:

- Hardware and software tuned for the 5–30 W envelope, with >3.0 effective TOPS per watt (eTOPS/W) on representative, real-time models

- >10 customer deployments where the solution beat incumbents in head-to-head bake-offs

- Quantified ROI across at least five verticals

- A veteran team spanning silicon and ML software, with multi-vertical go-to-market (GTM) chops

- Capital-efficient scaling, including non-dilutive funding

Meet EdgeCortix

Figure 3. TDK Ventures meeting the incredible EdgeCortix team.

After significant exploration and diligence across the landscape, we are honored to name our friends and partners at EdgeCortix (HQ: Tokyo) as our King of the Hill bet for Thick Edge compute. EdgeCortix is an edge-first AI compute company with an end-to-end platform: a software-first compiler/runtime stack (MERA) and a family of reconfigurable NPUs (Dynamic Neural Accelerator, “DNA”) delivered in the SAKURA-II line of co-processors, modules, and cards. From inception, the team chose to build the compiler first and then design tailored hardware around it, delivering optimal hardware/software compatibility and superior performance.

From a product standpoint, MERA unifies model ingestion (ONNX/TensorFlow/PyTorch), calibration, quantization, graph optimization, code generation, and runtime across heterogeneous systems. It can target NPUs, CPUs, GPUs, FPGAs, MCUs, and select third-party accelerators, enabling “write once, deploy many” with specialization preserved per target [14], [15]. The SAKURA-II devices, built on TSMC 12 nm, are generative-AI-capable within edge budgets and available as M.2 modules and low-profile PCIe cards to ease system integration across diverse x86 and Arm platforms like the Raspberry Pi 5 [16], [17]. Their Dynamic Neural Accelerator uses a patented, runtime-reconfigurable data path that adapts to CNNs, RNNs, and transformer-style attention to keep utilization high at batch-of-one, streaming workloads. The result is high effective throughput per watt under realistic conditions — the KPI that matters at the edge [18], [19].

EdgeCortix was named a World Economic Forum Technology Pioneer in 2024, won Electronic Products’ Product of the Year (Digital ICs) for SAKURA-II in 2024, and received a Japan-U.S. Innovation Award the same year [20]–[22]. The company announced an initial close of its Series B in August 2025 and, critically, secured 7B JPY (~$49M) in non-dilutive NEDO/METI project support (4B JPY in November 2024 and 3B JPY in May 2025) to accelerate energy-efficient chiplet programs for edge inference and on-device learning [11]. Partnerships such as the one with Renesas (compiler integration across its MCU/MPU portfolios) reinforce the “software-first” strategy and expand channel reach [26]. EdgeCortix has also highlighted aerospace and space readiness, with the radiation-tolerant performance of SAKURA-I and SAKURA-II validated in several tests conducted by NASA; this matters for edge-in-space scenarios where local autonomy is essential [27].

Their key differentiators include:

- Software-first, co-designed with silicon. EdgeCortix treats software as the product and silicon as one target for it. MERA’s heterogeneous reach means customers can start on the CPUs, GPUs, or FPGAs already in their fleet and graduate to SAKURA when performance per watt or BOM cost becomes critical. This de-risks adoption: developers don’t have to commit to a new chip to validate their model and pipeline [14], [15].

- A reconfigurable NPU built for batch-of-one. Thick Edge systems are dominated by streaming, batch-1 workloads (cameras, lidars, motors, links) where real-time latency trumps raw throughput. DNA’s runtime-reconfigurable data path is tuned for sustained utilization on such workloads, minimizing memory stalls and keeping compute busy even as layers and operator patterns change. That translates directly into effective TOPS/W advantages on real-world models, not just on marketing slides [18], [19].

- Gen-AI readiness at the edge. The next wave of edge intelligence is not just vision CNNs; it is small transformers, distilled LLMs, and multimodal pipelines. SAKURA-II’s memory subsystem and MERA’s graph optimizations allow developers to run compact generative models locally, and the company has demonstrated low-power GenAI on Arm hosts, including the Raspberry Pi 5, a powerful democratization signal [16], [17].

- Heterogeneous integration reduces friction. Many edge programs inherit a zoo of processors. MERA’s ability to target NPUs, CPUs, GPUs, FPGAs, and MCUs gives systems teams a path to standardize toolchains without ripping and replacing deployed hardware [14], [15]. This isn’t just a convenience; it’s a procurement moat. Once models and pipelines are tied into a stack, switching costs are high, and continuous compiler improvements accrue to customers over time.

- Chiplet scalability and packaging tailwinds. EdgeCortix is already executing on energy-efficient chiplet programs with NEDO backing, aligning with the industry’s move toward modular silicon connected by standards like UCIe. Chiplets let edge accelerators scale I/O and memory selectively without dragging compute onto an unnecessarily expensive node, preserving unit economics in the 5–30 W class [10], [11].

- Veteran team with edge-AI scars. The leadership spans AI research, compiler engineering, robotics development, and semiconductor productization across global roles. That matters in edge, where success depends on closing the loop between models, compilers, and hardware under real deployment constraints.

- Proof points across demanding verticals. From industrial vision and robotics to telecom and space, EdgeCortix’s portfolio shows the breadth to scale horizontally after initial beachheads. Recognition from the WEF, Electronic Products, and the Japan-U.S. Innovation Awards suggests both technical credibility and policy mindshare, which are valuable for the public-private programs that accelerate adoption [20]–[22], [27].
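The batch-of-one point in the list above can be made concrete with a little arithmetic. A minimal sketch, assuming a camera streaming at a fixed 30 fps and a hypothetical compute cost of 4 ms of fixed overhead plus 2 ms per frame (illustrative numbers, not measurements of SAKURA-II or any other device). The first frame in a batch must wait for the rest of the batch to arrive before inference can start.

```python
def batch_compute_ms(batch_size: int, fixed_ms: float = 4.0, per_frame_ms: float = 2.0) -> float:
    """HYPOTHETICAL cost model: fixed launch overhead plus a per-frame cost."""
    return fixed_ms + per_frame_ms * batch_size


def first_frame_latency_ms(batch_size: int, fps: float) -> float:
    """Latency seen by the first frame of a batch on a streaming sensor.

    That frame waits for (batch_size - 1) later frames to arrive before the
    batch can launch, then for the whole batch to finish computing.
    """
    if batch_size < 1 or fps <= 0:
        raise ValueError("batch_size must be >= 1 and fps > 0")
    frame_interval_ms = 1000.0 / fps
    return (batch_size - 1) * frame_interval_ms + batch_compute_ms(batch_size)


if __name__ == "__main__":
    fps = 30.0  # assumed camera frame rate
    for n in (1, 2, 4, 8):
        # Compute-side throughput only; the sensor still delivers just 30 frames/s.
        compute_fps = 1000.0 * n / batch_compute_ms(n)
        print(f"batch={n}: first-frame latency {first_frame_latency_ms(n, fps):6.1f} ms, "
              f"compute throughput {compute_fps:6.1f} frames/s")
```

Larger batches raise compute-side throughput, but a 30 fps sensor never produces more than 30 frames per second, so the extra capacity goes unused while latency grows by roughly one frame interval per added frame. That trade-off is why sustained utilization at batch 1 is the relevant design target for streaming edge workloads.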

The metric that matters: Effective TOPS per Watt

Comparing accelerators by peak TOPS is commonplace, but we find it an incomplete indicator: a peak figure assumes every compute unit is busy on every cycle, which rarely holds for real models running at batch-of-one. Instead, we prefer “effective” TOPS per watt (eTOPS/W): the operations per second actually sustained on a given workload, divided by measured device power. Achieving high eTOPS/W depends on compiler quality (operator placement, fusion, tiling), memory scheduling, sparsity exploitation, and batch-of-one optimizations for real-time use [23], [24], [25]. By this measure, EdgeCortix again stands out as an industry leader, particularly in the Thick Edge space.

Figure 4. EdgeCortix’s SAKURA-II performance metrics in terms of eTOPS/W.
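The eTOPS/W definition above reduces to a short calculation. The sketch below computes it from three measured quantities and contrasts it with a peak-based figure. The counting convention (one multiply-accumulate as two operations), the `Measurement` class, and all numbers are our illustrative assumptions; a rigorous comparison would follow a published methodology such as the MLPerf power measurements cited in [23].

```python
from dataclasses import dataclass

KPI_ETOPS_PER_W = 3.0  # threshold from the KPI list earlier in this post


@dataclass(frozen=True)
class Measurement:
    ops_per_inference: float  # operations per inference (assumes 1 MAC = 2 ops)
    inferences_per_s: float   # throughput sustained on the real workload, e.g. at batch 1
    avg_power_w: float        # measured average device power during the run

    def effective_tops(self) -> float:
        return self.ops_per_inference * self.inferences_per_s / 1e12

    def etops_per_watt(self) -> float:
        return self.effective_tops() / self.avg_power_w


def utilization(m: Measurement, peak_tops: float) -> float:
    """Fraction of the datasheet peak actually sustained."""
    return m.effective_tops() / peak_tops


if __name__ == "__main__":
    # HYPOTHETICAL numbers for illustration; not measurements of any real device.
    m = Measurement(ops_per_inference=10e9, inferences_per_s=1200.0, avg_power_w=8.0)
    peak_tops = 60.0
    # Peak-based figure: datasheet TOPS divided by the same measured power.
    print(f"peak-based TOPS/W: {peak_tops / m.avg_power_w:.2f}")
    print(f"effective TOPS:    {m.effective_tops():.2f} ({utilization(m, peak_tops):.0%} of peak)")
    print(f"eTOPS/W:           {m.etops_per_watt():.2f}")
    print(f"meets >{KPI_ETOPS_PER_W} eTOPS/W KPI: {m.etops_per_watt() > KPI_ETOPS_PER_W}")
```

With these made-up inputs, the device looks like 7.5 TOPS/W on paper but sustains only 1.5 eTOPS/W, which is exactly the gap the eTOPS/W framing is meant to expose.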

Why We Invested in EdgeCortix

At TDK Ventures, we invest in the pioneers who redefine what is possible for technology and how it can better our global future. TDK is itself an “edge” company at heart: sensors, power, connectivity, timing, and storage are ingredients that gain leverage when paired with on-device intelligence. EdgeCortix reflects both of these commitments, transforming edge computing from a fragmented, hardware-centric space into an integrated, software-defined ecosystem. Their software-first approach, grounded in compiler innovation and co-designed silicon, unlocks unprecedented efficiency and scalability in the 5–30 W Thick Edge segment. By bridging real-time AI inference with reconfigurable hardware, EdgeCortix is not only delivering market-leading performance per watt but also democratizing access to powerful AI where it’s needed most: on the factory floor, in autonomous machines, in space, and across the connected infrastructure that underpins modern life.

We believe this is precisely the right moment for such a company to scale. The convergence of hardware readiness, model optimization, and ecosystem tailwinds — from the CHIPS Act to Japan’s NEDO programs — has created fertile ground for edge AI acceleration. As incumbents focus on high-end datacenter and mobile SoCs, the Thick Edge remains the most compelling open frontier: vast in size, technically demanding, and strategically critical. EdgeCortix’s differentiated platform is already demonstrating that energy-efficient, software-driven inference can displace legacy architectures and enable new levels of autonomy, safety, and responsiveness in the physical world. Our investment in EdgeCortix reflects more than confidence in a product — it is a conviction in a movement. The edge is where intelligence meets reality, and where TDK’s decades of leadership in sensing, power, and connectivity find new expression through embedded AI. Together with EdgeCortix, we are advancing a shared mission to make intelligent systems faster, leaner, and more accessible. As the world races toward distributed AI, we believe EdgeCortix has the technology, team, and timing to become the definitive leader of the Thick Edge — and we are proud to stand beside them on that journey.

References

[1] TDK Ventures, bottom-up market analysis.

[2] MarketsandMarkets, “Edge AI Hardware Market Size, Share & Trends,” 2025. [Online]. Available: https://www.marketsandmarkets.com/Market-Reports/edge-ai-hardware-market-158498281.html

[3] Grand View Research, “Edge AI Market Size, Share & Growth,” 2024. [Online]. Available: https://www.grandviewresearch.com/industry-analysis/edge-ai-market-report

[4] Kinara, “Optimizing Latency for Edge AI Deployments,” whitepaper, 2024. [Online]. Available: https://kinara.ai/wp-content/themes/kinara/files/Latency-on-the-Edge-WP-v2.pdf

[5] J. Kilit, “Edge Computing and GDPR: A Technical Security and Legal Perspective,” Master’s thesis, 2025. [Online]. Available: https://www.diva-portal.org/smash/get/diva2%3A1982107/FULLTEXT01.pdf

[6] Google AI Edge, “LiteRT (formerly TensorFlow Lite) Overview,” May 19, 2025. [Online]. Available: https://ai.google.dev/edge/litert

[7] Meta, “ExecuTorch Documentation,” 2025. [Online]. Available: https://docs.pytorch.org/executorch/index.html

[8] Microsoft, “ONNX Runtime Documentation,” 2025. [Online]. Available: https://onnxruntime.ai/docs/

[9] Microsoft, “Introducing Copilot+ PCs,” May 20, 2024. [Online]. Available: https://blogs.microsoft.com/blog/2024/05/20/introducing-copilot-pcs/

[10] UCIe Consortium, “UCIe Specifications,” 2024. [Online]. Available: https://www.uciexpress.org/specifications

[11] EdgeCortix, “NEDO Subsidy Announcements (4B JPY in Nov. 2024; 3B JPY in May 2025),” Press Releases. [Online]. Available: https://www.edgecortix.com/en/press-releases/edgecortix-receives-4-billion-yen-subsidy-from-japans-nedo and https://www.edgecortix.com/en/press-releases/edgecortix-awarded-new-3-billion-yen-nedo-project-to-develop-advanced-energy-efficient-ai-chiplet-for-edge-inference-and-learning

[12] NVIDIA, “About CUDA,” 2025. [Online]. Available: https://developer.nvidia.com/about-cuda

[13] NVIDIA, “CUDA Toolkit,” 2025. [Online]. Available: https://developer.nvidia.com/cuda-toolkit

[14] EdgeCortix, “MERA Compiler and Software Framework,” Product Page, 2025. [Online]. Available: https://www.edgecortix.com/en/products/mera

[15] EdgeCortix, “Edge AI Inference Products and Technology,” 2025. [Online]. Available: https://www.edgecortix.com/en/products

[16] EdgeCortix, “SAKURA-II Energy-Efficient Edge AI Co-processor,” Product Page, 2025. [Online]. Available: https://www.edgecortix.com/en/products/sakura

[17] EdgeCortix, “SAKURA-II Brings Low-Power Generative AI to Raspberry Pi 5 and other Arm-Based Platforms,” Press Release, Jun. 3, 2025. [Online]. Available: https://www.edgecortix.com/en/press-releases/edgecortixs-sakura-ii-ai-accelerator-brings-low-power-generative-ai-to-raspberry-pi-5-and-other-arm-based-platforms

[18] EdgeCortix, “Dynamic Neural Accelerator (DNA) Architecture,” Product Page, 2025. [Online]. Available: https://www.edgecortix.com/en/products/dna

[19] S. Dasgupta, “Dynamic Neural Accelerator for Reconfigurable & Energy-Efficient Edge AI,” Hot Chips Poster, 2021. [Online]. Available: https://hc33.hotchips.org/assets/program/posters/HC2021.EdgeCortix.Sakyasingha_Dasgupta_v02.pdf

[20] World Economic Forum, “Meet 2024’s Technology Pioneers,” Jun. 6, 2024. [Online]. Available: https://www.weforum.org/stories/2024/06/2024s-new-tech-pioneers-world-economic-forum/

[21] Electronic Products / EDN, “Winners of the 2024 Product of the Year Awards,” Jan. 31, 2025. [Online]. Available: https://www.edn.com/electronic-products-announces-winners-of-the-2024-product-of-the-year-awards/

[22] EdgeCortix, “EdgeCortix Awarded Japan-US Innovation Award 2024,” Press Release, 2024. [Online]. Available: https://www.edgecortix.com/en/press-releases/edgecortix-awarded-japan-us-innovation-award-2024

[23] MLCommons, “MLPerf Inference: Edge,” 2025. [Online]. Available: https://mlcommons.org/benchmarks/inference-edge/ and “MLPerf Inference v1.0 Results with First Power Measurements,” 2021. [Online]. Available: https://mlcommons.org/2021/04/mlperf-inference-v1-0-results-with-first-power-measurements/

[24] R. Tobiasz et al., “Edge Devices Inference Performance Comparison,” Journal of Computing Science and Engineering, vol. 17, no. 2, 2023. [Online]. Available: https://jcse.kiise.org/files/V17N2-02.pdf

[25] Z. Li et al., “A Benchmark for ML Inference Latency on Mobile Devices,” EdgeSys’24, 2024. [Online]. Available: https://qed.usc.edu/paolieri/papers/2024_edgesys_mobile_inference_benchmark.pdf

[26] Renesas, “Renesas Partners with EdgeCortix to Streamline AI/ML Development,” Oct. 4, 2023. [Online]. Available: https://www.renesas.com/en/about/newsroom/renesas-partners-edgecortix-streamline-aiml-development

[27] EdgeCortix, “SAKURA-I Demonstrates Robust Radiation Resilience for Orbital and Lunar Missions,” Press Release, Jan. 28, 2025. [Online]. Available: https://www.edgecortix.com/en/press-releases/edgecortix-sakura-i-ai-accelerator-demonstrates-robust-radiation-resilience-suitable-for-many-orbital-and-lunar-expeditions